Speaker: Isacco Zappa (Politecnico di Milano)

July 8, 2024 | 11:45 a.m.

Emilio Gatti Conference Room

Contact: Prof. Simone Formentin | Research Line: Robotics and Industrial Automation

Abstract

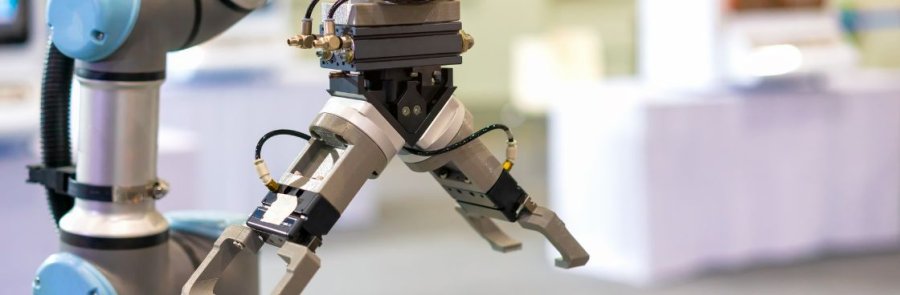

With the onset of the fourth industrial revolution, the role of robots in factories has been reconsidered. Collaborative robots (cobots) embody this change, offering companies smarter, interoperable solutions for automating production lines. Advances in cobot design and sensor technology have also enabled safe interaction with human operators and simplified, user-friendly programming. Despite their growing market share, the need for field experts to configure cobots for different tasks still limits their widespread adoption, especially among small and medium-sized enterprises. Several strategies have been proposed to make cobot programming intuitive and accessible to everyone. Nevertheless, even when demonstrations of the task are provided, the robot still perceives the task only as a series of movements and end-effector actions.

In this seminar, I will outline my research, which focuses on methods to deepen the cobot's understanding of the skills taught by the operator through demonstrations. After a brief introduction to the industrial context, I will present my work on enriching the robot's semantic understanding of the scene, how the action models are computed, and how the knowledge the robot acquires from the demonstration can be exploited.